Apprenticeships

A full-stack skills solution for Data & AI funded by the UK Apprenticeship Levy

Data & AI skills to accelerate business outcomes

Data & AI Practitioner Programmes

Apprenticeship

Data Citizen

Level 3

13 months

Master the essential mindset, tools and skills to thrive in a data-driven organisation.

- The value of data

- Storytelling with data

- Create real-time dashboards

- Automate time-consuming spreadsheet tasks

Apprenticeship

Data Analyst

Level 4

14 months

Leverage the analytics power of Python, SQL and introductory machine learning.

- Automate reporting and processes

- Build self-service data teams

- Practical hackathon

- Specialist electives available

Apprenticeship

Data Engineer

Level 5

14 months

Develop key internal capabilities to raise the usability of critical datasets in your organisation.

- Python programming

- SQL

- Data modelling

- Software testing

Apprenticeship

AI Engineer

Level 6

15 months

Master the skills to operationalise machine learning and to build and deploy real-world AI solutions.

- Practical Machine Learning Techniques

- Securing and Optimising ML Systems

- Generative AI and Large Language Models

- Machine Learning in Production

Apprenticeship

AI and Data Science

Level 7

15 months

Build and productionise predictive models by leveraging ML and data science.

- Product management for AI

- Neural networks and deep learning

- Practical hackathon

- Specialist electives available

Transformation Programmes

Apprenticeship

AI Champion

Level 3

13 months

Accelerate your organisation's AI transformation and increase productivity through the adoption of generative AI tools like Microsoft Copilot.

- Identify, use and champion AI tools

- Support the implementation and rollout of AI solutions

- Provide ongoing support and continuous improvement

Apprenticeship

AI Transformation Specialist

Level 4

14 months

Master the skills to leverage AI responsibly, accelerate business outcomes and future-proof your career in the AI era.

- AI-driven business analysis and transformation

- Data & AI for business value

- Process modelling for digital transformation

- Managing business change and impact

Apprenticeship

Digital Product Manager

Level 4

14 months

Build and manage digital products that meet customer needs and generate growth.

- Data analysis for product management

- User-centric design

- Stakeholder management

- AI for product management

One apprentice can create real business impact

£1.4m revenue identified through data-driven insights

£120,000 saved by creating efficiencies

90% shorter project times achieved through automations

5x faster ML model training achieved through automations

Delivery and learner support designed to maximise impact

We deliver all of our programmes online, helping our clients offer flexible and inclusive programmes open to all of their staff. We believe that the gold standard for online delivery is to offer a mix of experiential learning, coaching, technical mentorship and peer support.

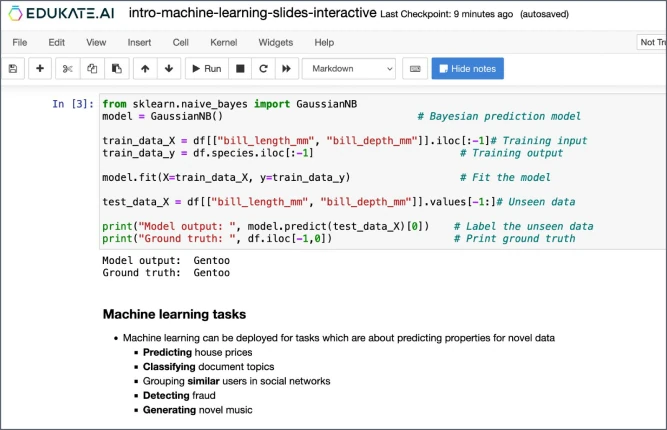

24/7 immediate feedback

EDUKATE.AI, our learning platform designed for data science education, gives learners immediate, personalised feedback on their code.

Fast skills deployment

Learners apply their skills to real datasets from their first day of learning, with assignments on EDUKATE.AI simulating a working industry environment.

Tailored expert curriculum

A modular curriculum developed with leading experts from academia and industry to meet all skills needs in an organisation.

Peer support

Engagement and support from peers through Knowledge Base, our Q&A feature built into EDUKATE.AI.

Easy set-up

Our cloud-based platform requires no installation or set-up for our learners, with their content available whenever they need it.

Powering skills development in Data Science

Enquire now

Fill out the following form and we’ll contact you within one business day to discuss the programme and answer any questions you have. We look forward to speaking with you.